Naveen Rao, a neuroscientist turned tech entrepreneur, once tried to compete with Nvidia, the world's leading maker of chips tailored for artificial intelligence (AI).

At a start-up that the semiconductor giant Intel later acquired, Mr. Rao worked on chips meant to replace Nvidia's graphics processing units (GPUs), the components widely used for AI workloads such as machine learning. But while Intel moved slowly, Mr. Rao said, Nvidia quickly upgraded its products with new AI features that countered what he was developing.

After leaving Intel to run a software start-up, MosaicML, Mr. Rao used Nvidia's chips and evaluated them against those of rivals. He found that Nvidia's advantage went beyond its hardware: the company had cultivated a large community of AI developers who consistently find innovative uses for its technology.

For more than a decade, Nvidia has been at the forefront of developing chips for demanding AI tasks such as image, facial and speech recognition, as well as chatbots like ChatGPT. The once-obscure company became dominant in its field by anticipating the rise of AI, designing chips specifically for AI workloads and writing essential AI software.

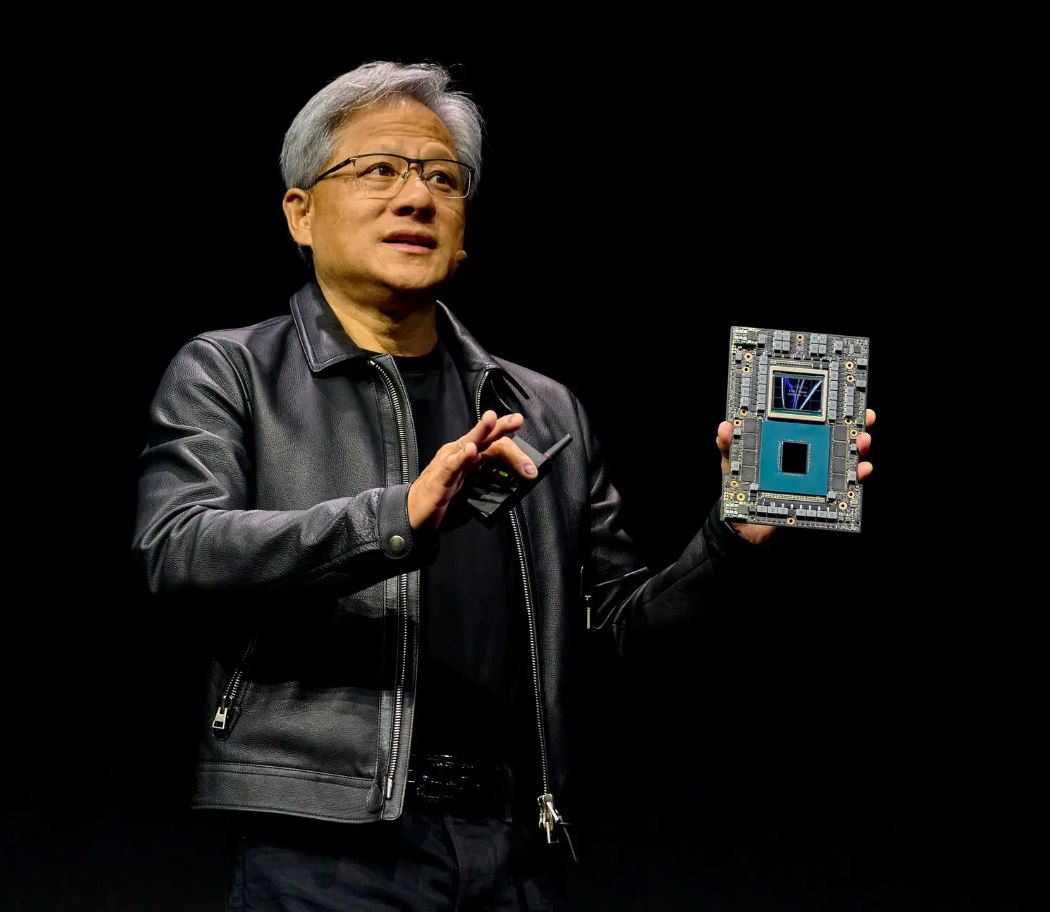

Nvidia's co-founder and chief executive, Jensen Huang, has repeatedly raised the bar. The company has stayed ahead of its industry by giving customers access to specialised computers, computing services and other advanced tools. As a result, Nvidia has become, in effect, a one-stop shop for AI research and development.

Although companies such as Google, Amazon, Meta and IBM have built their own AI processors, Nvidia accounts for more than 70% of AI chip sales, according to the research firm Omdia, and holds an even larger share of the market for training generative AI models.

Mr. Huang co-founded Nvidia in 1993 to make graphics chips for video games. Unlike conventional microprocessors, which excel at performing complex calculations one after another, the company's GPUs carry out many simple tasks at the same time.

In 2006, Mr. Huang took the technology further. He introduced CUDA, a software platform that let developers program GPUs for new tasks, turning them from graphics-only processors into chips that could handle a much wider range of work, such as physics and chemistry simulations.

Nvidia went on to bet heavily on AI. In a recent commencement address at National Taiwan University, Mr. Huang said the company is dedicating “every aspect of our company to advance this new field.”

That effort, which Nvidia estimates has cost more than $30 billion over the past decade, has made the company more than a component supplier. Besides working with leading scientists and start-ups, it has assembled its own team that takes part directly in AI work, including building and training language models.

Anticipating what AI practitioners would need, Nvidia built many layers of essential software beyond CUDA, including hundreds of libraries: collections of prewritten code that make developers' work easier.

In hardware, Nvidia is known for releasing faster chips every two years. In 2017, it began tailoring its GPUs to handle specific AI calculations.

Nvidia has also recently invested money, along with its scarce H100 chips, in young cloud providers such as CoreWeave, which let businesses rent computing capacity instead of buying it outright. CoreWeave, which will run the technology of the AI start-up Inflection, raised $2.3 billion in debt this month to buy even more Nvidia processors.

Those chips do not come cheap. Industry executives and analysts estimate that a single H100 costs from $15,000 to more than $40,000, depending on packaging and other factors, roughly two to three times the price of its predecessor, the A100.

Still, competition is bound to intensify. Mr. Rao, whose start-up was recently acquired by the data and AI company Databricks, cited a GPU made by Advanced Micro Devices (AMD) as one of the most promising challengers.