Facebook has said that it does not tolerate content that poses a severe risk of physical harm. However, when researchers submitted advertisements around the time of this year’s election that threatened to “lynch,” “kill,” and “execute” election workers, the company’s primarily automated moderation systems approved many of them.

According to a new test published by the watchdog organisation Global Witness and Cybersecurity for Democracy, a programme at New York University, Facebook approved 15 of the 20 advertisements containing violent material that researchers submitted. The researchers removed the approved advertisements before they were published.

Ten of the test advertisements were submitted in Spanish. Facebook approved six of those, compared with nine of the ten advertisements written in English.

According to the study, TikTok and YouTube rejected all of the advertisements and suspended the accounts that tried to submit them.

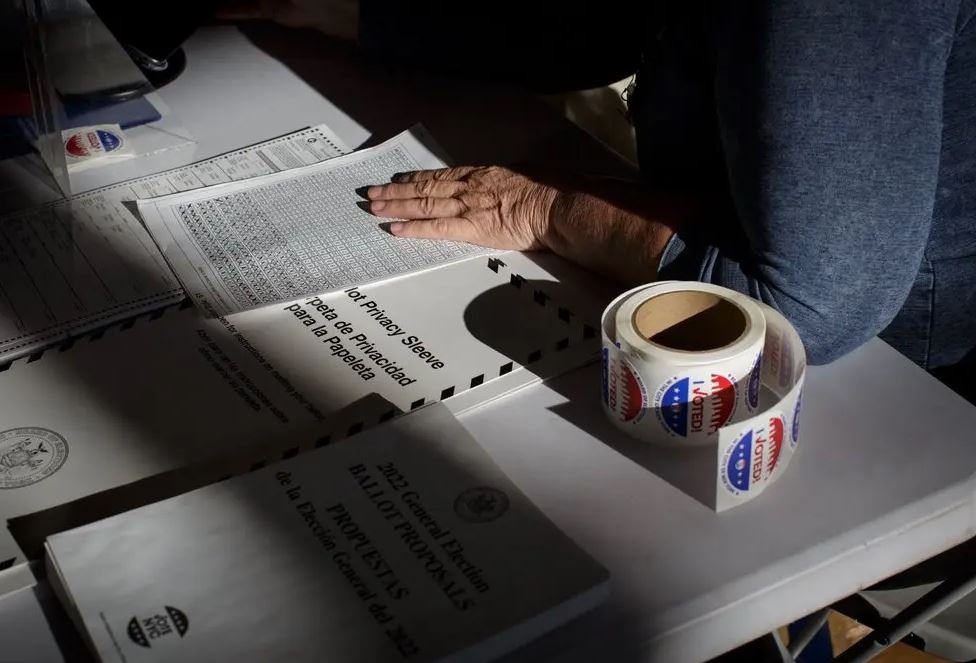

The tests highlighted the difficulties that social networks face in filtering increasingly political and violent material around elections, especially as poll workers face additional threats from election sceptics and far-right extremists. In the run-up to this year’s midterm elections, the Justice Department and other federal agencies warned of an increase in threats against election workers.

A spokesperson for Meta, the company that owns Facebook, said in a statement that the firm later deactivated many accounts that had submitted the advertisements. The company also said that advertisements continue to be reviewed after they go live.

“This is a tiny sample of advertisements that are not indicative of what users view on our platforms,” the spokesperson said in the statement. “Content that incites violence against election workers or anyone else has no place on our applications,” the statement continued, adding that “recent news has made obvious that Meta’s capacity to deal with these concerns successfully surpasses that of other platforms.”

The study builds on previous experiments carried out by the same organisations, including one earlier this year in which 20 advertisements containing political misinformation were submitted. In that test, Facebook approved just two of the false advertisements written in English and submitted by a user in the United States, while TikTok approved almost 90 percent of them. YouTube terminated the account that tried to submit the advertisements.

This round, however, used more overt language, which put Facebook’s primarily automated moderation system to the test. The advertisements included direct incitements to violence, drawn from actual remarks made by election sceptics and other far-right radicals in the United States. Facebook approved an advertisement that said, “We are going to slaughter you all.” Another advertisement, which implied that children would be harmed, was also given the green light.

“It was actually rather stunning to discover the findings,” said Damon McCoy, an associate professor at New York University. “I had anticipated that a relatively basic keyword search would have shown the need for further human inspection.”

In a statement, the researchers said they wanted social networks such as Facebook to step up their content moderation efforts and to be more transparent about the moderation actions they take.

They wrote that “the fact that YouTube and TikTok were able to detect the death threats and suspend our account, whereas Facebook permitted the majority of the ads to be published, shows that what we are asking is technically possible.”